-

[CS231n] 7. Neural Networks Part 3 : Learning and EvaluationMachine Learning/CS231n 2022. 5. 5. 16:34728x90

gradient checks, sanity checks, babysitting the learning process, momentum(+nesterov), second-order methods, Adagrad/RMSprop, hyperparameter optimization, model ensembles

Learning

This section is devoted to the dynamics, the process of learning the parameters and finding good hyperparameters

Gradient Checks

- Use the centered formula

finite difference approximation when evaluating the numerical gradient looks as follows:

In practice, it turns out that it is much better to use the centered difference formula of the form:

- Use relative error for the comparison

What are the details of comparing the numerical gradient f′n and analytic gradient f′a?

→ considers their ratio of the differences to the ratio of the absolute values of both gradients.

- relative error > 1e-2 usually means the gradient is probably wrong

- 1e-2 > relative error > 1e-4 should make you feel uncomfortable

- 1e-4 > relative error is usually okay for objectives with kinks. But if there are no kinks (e.g. use of tanh nonlinearities and softmax), then 1e-4 is too high.

- 1e-7 and less you should be happy.

the deeper the network, the higher the relative errors will be.

→ So if you are gradient checking the input data for a 10-layer network, a relative error of 1e-2 might be okay because the errors build up on the way.

Before learning : sanity checks Tips/Tricks

a few sanity checks you might consider running before you plunge into expensive optimization:

- Look for correct loss at chance performance

Make sure you’re getting the loss you expect when you initialize with small parameters.

first check the data loss alone (so set regularization strength to zero) - As a second sanity check, increasing the regularization strength should increase the loss.

- Overfit a tiny subset of data

Lastly and most importantly, before training on the full dataset try to train on a tiny portion of data and make sure you can achieve zero cost.

Unless you pass this sanity check with a small dataset it is not worth proceeding to the full dataset.

Babysitting the learning proess

There are multiple useful quantities you should monitor during training of a neural network.

- The x-axis of the plots below are always in units of epochs, which measure how many times every example has been seen during training in expectation (e.g. one epoch means that every example has been seen once).

Loss function

The loss is evaluated on the individual batches during the forward pass.

Left: A cartoon depicting the effects of different learning rates. With low learning rates the improvements will be linear. With high learning rates they will start to look more exponential. Higher learning rates will decay the loss faster, but they get stuck at worse values of loss (green line). This is because there is too much "energy" in the optimization and the parameters are bouncing around chaotically, unable to settle in a nice spot in the optimization landscape.

Right: An example of a typical loss function over time, while training a small network on CIFAR-10 dataset. This loss function looks reasonable (it might indicate a slightly too small learning rate based on its speed of decay, but it's hard to say), and also indicates that the batch size might be a little too low (since the cost is a little too noisy).- The amount of “wiggle” in the loss is related to the batch size.

→ When the batch size is the full dataset, the wiggle will be minimal because every gradient update should be improving the loss function monotonically (unless the learning rate is set too high). - Some people prefer to plot their loss functions in the log domain.

→ the plot appears as a slightly more interpretable straight line

→ if multiple cross-validated models are plotted on the same loss graph, the differences between them become more apparent

Train/Val accuracy

This plot can give you valuable insights into the amount of overfitting in your model

The gap between the training and validation accuracy indicates the amount of overfitting. Two possible cases are shown in the diagram.

- The blue validation error curve shows very small validation accuracy compared to the training accuracy, indicating strong overfitting (note, it's possible for the validation accuracy to even start to go down after some point). When you see this in practice you probably want to increase regularization (stronger L2 weight penalty, more dropout, etc.) or collect more data.

- The other possible case is when the validation accuracy tracks the training accuracy fairly well. This case indicates that your model capacity is not high enough: make the model larger by increasing the number of parameters.

Ratio of weights : updates

updates, not the raw gradients (e.g. in vanilla sgd this would be the gradient multiplied by the learning rate).

- A rough heuristic is that this ratio should be somewhere around 1e-3.→ If it is higher then the learning rate is likely too high.

- → If it is lower than this then the learning rate might be too low.

# assume parameter vector W and its gradient vector dW param_scale = np.linalg.norm(W.ravel()) update = -learning_rate*dW # simple SGD update update_scale = np.linalg.norm(update.ravel()) W += update # the actual update print update_scale / param_scale # want ~1e-3Activation / Gradient distributions per layer

An incorrect initialization can slow down or even completely stall the learning process. → plot activation/gradient histograms for all layers of the network.

- Intuitively, it is not a good sign to see any strange distributions

→ with tanh neurons we would like to see a distribution of neuron activations between the full range of [-1,1]

→ instead of seeing all neurons outputting zero, or all neurons being completely saturated at either -1 or 1.

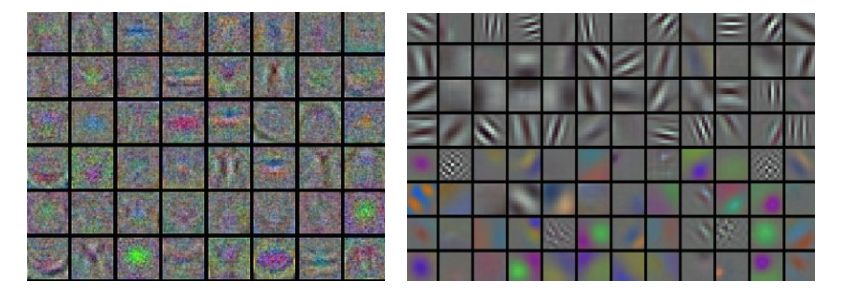

First-layer Visualizations

When one is working with image pixels it can be helpful and satisfying to plot the first-layer features visually

Left: Noisy features indicate could be a symptom: Unconverged network, improperly set learning rate, very low weight regularization penalty.

Right: Nice, smooth, clean and diverse features are a good indication that the training is proceeding well.

Parameter updates

Once the analytic gradient is computed with backpropagation, the gradients are used to perform a parameter update. There are several approaches for performing the update

SGD and bells and whistles

- Vanilla update

The simplest form of update is to change the parameters along the negative gradient direction

# Vanilla update x += - learning_rate * dxWhen evaluated on the full dataset, and when the learning rate is low enough, this is guaranteed to make non-negative progress on the loss function.

- Momentum update

Initializing the parameters with random numbers is equivalent to setting a particle with zero initial velocity at some location. The optimization process can then be seen as equivalent to the process of simulating the parameter vector (i.e. a particle) as rolling on the landscape. The physics view suggests an update in which the gradient only directly influences the velocity, which in turn has an effect on the position:

# Momentum update v = mu * v - learning_rate * dx # integrate velocity x += v # integrate position→ Here we see an introduction of a

vvariable that is initialized at zero, and an additional hyperparameter (mu).As an unfortunate misnomer, this variable is in optimization referred to as momentum (its typical value is about 0.9), but its physical meaning is more consistent with the coefficient of friction.

When cross-validated, this parameter is usually set to values such as [0.5, 0.9, 0.95, 0.99].

- Nesterov Momentum

It enjoys stronger theoretical converge guarantees for convex functions and in practice it also consistenly works slightly better than standard momentum.

Nesterov momentum. Instead of evaluating gradient at the current position (red circle), we know that our momentum is about to carry us to the tip of the green arrow. With Nesterov momentum we therefore instead evaluate the gradient at this "looked-ahead" position.

x_ahead = x + mu * v # evaluate dx_ahead (the gradient at x_ahead instead of at x) v = mu * v - learning_rate * dx_ahead x += vThe parameter vector we are actually storing is always the ahead version. The equations in terms of x_ahead (but renaming it back to x) then become:

v_prev = v # back this up v = mu * v - learning_rate * dx # velocity update stays the same x += -mu * v_prev + (1 + mu) * v # position update changes formAnnealing the learning rate

In training deep networks, it is usually helpful to anneal the learning rate over time.

- Step decay

Reduce the learning rate by some factor every few epochs.

One heuristic you may see in practice is to watch the validation error while training with a fixed learning rate, and reduce the learning rate by a constant (e.g. 0.5) whenever the validation error stops improving. - Exponential decay

α=α_0*e^(−kt)where α_0, k are hyperparameters and t is the iteration number - 1/t decay

α=α_0/(1+kt)where a_0,k are hyperparameters and t is the iteration number.

In practice, we find that the step decay is slightly preferable because the hyperparameters it involves (the fraction of decay and the step timings in units of epochs) are more interpretable than the hyperparameter k

- Lastly, if you can afford the computational budget, err on the side of slower decay and train for a longer time.

Per-parameter adaptive learning rate methods

They are well-behaved for a broader range of hyperparameter values than the raw learning rate.

- Adagrad

adaptive learning rate method

# Assume the gradient dx and parameter vector x cache += dx**2 x += - learning_rate * dx / (np.sqrt(cache) + eps)→ the variable

cachehas size equal to the size of the gradient, and keeps track of per-parameter sum of squared gradients.The weights that receive high gradients will have their effective learning rate reduced, while weights that receive small or infrequent updates will have their effective learning rate increased. The square root operation turns out to be very important In case of Deep Learning, the monotonic learning rate usually proves too aggressive and stops learning too early.

- RMSprop

RMSprop is a very effective, but currently unpublished adaptive learning rate method.

cache = decay_rate * cache + (1 - decay_rate) * dx**2 x += - learning_rate * dx / (np.sqrt(cache) + eps)→ Here,

decay_rateis a hyperparameter and typical values are [0.9, 0.99, 0.999].Notice that the

x+=update is identical to Adagrad, but thecachevariable is a “leaky”- Adam

It is a recently proposed update that looks a bit like RMSProp with momentum.

m = beta1*m + (1-beta1)*dx v = beta2*v + (1-beta2)*(dx**2) x += - learning_rate * m / (np.sqrt(v) + eps)→ Recommended values in the paper are

eps = 1e-8,beta1 = 0.9,beta2 = 0.999.In practice Adam is currently recommended as the default algorithm to use, and often works slightly better than RMSProp.

The full Adam update also includes a bias correction mechanism, which compensates for the fact that in the first few time steps the vectorsm, vare both initialized and therefore biased at zero, before they fully “warm up”.# t is your iteration counter going from 1 to infinity m = beta1*m + (1-beta1)*dx mt = m / (1-beta1**t) v = beta2*v + (1-beta2)*(dx**2) vt = v / (1-beta2**t) x += - learning_rate * mt / (np.sqrt(vt) + eps)

Animations that may help your intuitions about the learning process dynamics. Contours of a loss surface and time evolution of different optimization algorithms. Notice the "overshooting" behavior of momentum-based methods, which make the optimization look like a ball rolling down the hill.

A visualization of a saddle point in the optimization landscape, where the curvature along different dimension has different signs (one dimension curves up and another down). Notice that SGD has a very hard time breaking symmetry and gets stuck on the top. Conversely, algorithms such as RMSprop will see very low gradients in the saddle direction. Due to the denominator term in the RMSprop update, this will increase the effective learning rate along this direction, helping RMSProp proceed. Images credit: Alec Radford.

Hyperparameter optimization

The most common hyperparameters in context of Neural Networks include:

- the initial learning rate

- learning rate decay schedule (such as the decay constant)

- regularization strength (L2 penalty, dropout strength)

Prefer one validation fold to cross-validation- In most cases a single validation set of respectable size substantially simplifies the code base, without the need for cross-validation with multiple folds.

Hyperparameter ranges- Search for hyperparameters on log scale.

Prefer random search to grid search- “randomly chosen trials are more efficient for hyper-parameter optimization than trials on a grid”

It is very often the case that some of the hyperparameters matter much more than others (e.g. top hyperparam vs. left one in this figure). Performing random search rather than grid search allows you to much more precisely discover good values for the important ones.

Careful with best values on border- Once we receive the results, it is important to double check that the final learning rate is not at the edge of this interval, or otherwise you may be missing more optimal hyperparameter setting beyond the interval.

Stage your search from coarse to fine- In practice, it can be helpful to first search in coarse ranges (e.g. 10 ** [-6, 1]), and then depending on where the best results are turning up, narrow the range.

Evaluation

Model Ensembles

In practice, one reliable approach to improving the performance of Neural Networks by a few percent is to train multiple independent models, and at test time average their predictions.

- Same model, different initializations

Use cross-validation to determine the best hyperparameters, then pick the top few (e.g. 10) models to form the ensemble. In practice, this can be easier to perform since it doesn’t require additional retraining of models after cross-validation - Different checkpoints of a single model

If training is very expensive, some people have had limited success in taking different checkpoints of a single network over time (for example after every epoch) and using those to form an ensemble. Clearly, this suffers from some lack of variety, but can still work reasonably well in practice. The advantage of this approach is that is very cheap. - Running average of parameters during training

Related to the last point, a cheap way of almost always getting an extra percent or two of performance is to maintain a second copy of the network’s weights in memory that maintains an exponentially decaying sum of previous weights during training. You will find that this “smoothed” version of the weights over last few steps almost always achieves better validation error.

Summary

To train a Neural Network:

- Gradient check your implementation with a small batch of data and be aware of the pitfalls.

- As a sanity check, make sure your initial loss is reasonable, and that you can achieve 100% training accuracy on a very small portion of the data

- During training, monitor the loss, the training/validation accuracy, and if you’re feeling fancier, the magnitude of updates in relation to parameter values (it should be ~1e-3), and when dealing with ConvNets, the first-layer weights.

- The two recommended updates to use are either SGD+Nesterov Momentum or Adam.

- Decay your learning rate over the period of the training. For example, halve the learning rate after a fixed number of epochs, or whenever the validation accuracy tops off.

- Search for good hyperparameters with random search (not grid search). Stage your search from coarse (wide hyperparameter ranges, training only for 1-5 epochs), to fine (narrower rangers, training for many more epochs)

- Form model ensembles for extra performance

728x90'Machine Learning > CS231n' 카테고리의 다른 글

[CS231n] 9. Convolutional Neural Networks: Layer Patterns, Case studies (0) 2022.05.13 [CS231n] 8. Convolutional Neural Networks: Architectures, Pooling Layers (0) 2022.05.12 [CS231n] 6. Neural Networks Part2 : Setting up the Data (0) 2022.05.03 [CS231n] 5. Neural Networks Part 1: Setting up the Architecture (0) 2022.04.29 [CS231n] 4. Backpropagation (0) 2022.04.27 - Use the centered formula